NEW: BrowserGrow.com is now available!

AI agents to grow your business & do your marketing on autopilot in your browser

We’ve all been there. You’re driving, or maybe in the shower, and a brilliant melody lands in your head. You hum it into your voice memos, promising to figure out the chords later. But when you sit down at your computer, the magic fades. You struggle to translate that hummed audio into playable notes.

For decades, this "translation gap" was the biggest hurdle for non-classically trained musicians.

But in 2024, the script has flipped. The music industry is undergoing a tectonic shift, driven not just by new synthesizers, but by Artificial Intelligence. We aren't just talking about robots writing pop songs; we are talking about tools that act as a bridge between raw audio and editable data.

Today, we’re diving deep into how smart data is fueling this revolution, looking specifically at how tools like MusicArt are democratizing music production through features such as Audio-to-MIDI conversion, and at how savvy marketers are using this tech to save thousands of dollars.

To understand how we got here, we have to look under the hood. AI doesn't "hear" music the way we do; it processes it as data.

The leap from simple synthesizers to intelligent composition tools is powered by Smart Data. In the context of AI music, this refers to massive datasets in which raw audio waveforms are paired with their metadata (pitch, duration, timbre, and genre).
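To make "pairing audio with metadata" concrete, here is a minimal sketch of how note annotations for one clip might be turned into frame-by-frame training targets (a "piano roll"), one common way transcription datasets are prepared. The `NoteAnnotation` type, the 10 ms frame hop, and the 88-key range starting at MIDI note 21 are illustrative assumptions, not details of any specific product or dataset.

```python
from dataclasses import dataclass

# Illustrative assumptions: 10 ms frames and the 88 piano keys (MIDI 21..108).
HOP_SECONDS = 0.01
LOWEST_NOTE = 21
NUM_KEYS = 88


@dataclass
class NoteAnnotation:
    pitch: int      # MIDI note number (60 = middle C)
    onset: float    # start time in seconds
    offset: float   # end time in seconds
    velocity: int   # loudness, 1..127


def piano_roll(notes, clip_seconds, hop=HOP_SECONDS):
    """Return a frames x 88 grid of 0/1 labels: 1 where a key is sounding."""
    num_frames = int(round(clip_seconds / hop))
    roll = [[0] * NUM_KEYS for _ in range(num_frames)]
    for note in notes:
        key = note.pitch - LOWEST_NOTE
        if not 0 <= key < NUM_KEYS:
            continue  # skip pitches outside the piano range
        first = int(round(note.onset / hop))
        last = min(num_frames, int(round(note.offset / hop)))
        for frame in range(first, last):
            roll[frame][key] = 1
    return roll
```

A model trained on many (spectrogram, piano roll) pairs like this learns to predict the grid from audio alone.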

According to a 2023 report by Market.us, the Generative AI in Music market is projected to reach $2.6 billion by 2032, growing at a CAGR of 28.6%. This explosion is fueled by machine learning models (such as neural networks) that have ingested millions of hours of music.

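When an AI analyzes a track, it isn't just listening; it computes a spectrogram (a map of which frequencies are present at each moment) and breaks the sound down from there. It learns, for example, that a spike of energy at about 261.6 Hz at a given moment corresponds to middle C on a piano.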
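Once a frequency peak has been found, naming the note is fixed arithmetic in standard A440 equal temperament: each semitone multiplies the frequency by 2^(1/12), and MIDI numbers A4 (440 Hz) as note 69. The sketch below covers only that final labeling step; finding the peak in a real recording is the hard part that a trained model handles. The helper names are hypothetical.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def hz_to_midi(freq_hz, a4_hz=440.0):
    """Map a frequency to the nearest MIDI note number (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / a4_hz))


def midi_to_name(note):
    """Spell a MIDI note number with its octave, e.g. 60 -> 'C4' (middle C)."""
    return f"{NOTE_NAMES[note % 12]}{note // 12 - 1}"
```

For instance, `midi_to_name(hz_to_midi(261.63))` gives `"C4"`. Rounding to the nearest semitone means a slightly sharp 265 Hz peak is still labeled middle C.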

Pattern Recognition: The AI identifies chord progressions that statistically follow one another.

Timbre Analysis: It distinguishes between a violin and a synthesizer based on harmonic overtones.
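One simple way to capture "chord progressions that statistically follow one another" is a first-order Markov model: count how often each chord is followed by each other chord, then normalize the counts into probabilities. Production systems typically use far richer neural models; this is only a minimal stand-in, and the toy progressions in the usage note are made up for illustration.

```python
from collections import Counter, defaultdict


def transition_probabilities(progressions):
    """Estimate P(next chord | current chord) from example progressions."""
    counts = defaultdict(Counter)
    for progression in progressions:
        # Count every adjacent (current, following) pair of chords.
        for current, following in zip(progression, progression[1:]):
            counts[current][following] += 1
    probs = {}
    for chord, followers in counts.items():
        total = sum(followers.values())
        probs[chord] = {nxt: n / total for nxt, n in followers.items()}
    return probs


def most_likely_next(probs, chord):
    """Return the most frequent follower of `chord`, or None if it was never seen."""
    followers = probs.get(chord)
    if not followers:
        return None
    return max(followers, key=followers.get)
```

Trained on `[["C", "G", "Am", "F"], ["C", "G", "F", "C"], ["Am", "F", "C", "G"]]`, the model learns that C is always followed by G, while G splits evenly between Am and F.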

This "Smart Data" infrastructure is what allows a platform to not only generate music but to deconstruct it—taking a finished song and breaking it back down into its building blocks.

The modern AI music tool is no longer a gimmick; it is a co-pilot.

In the past, music production required knowledge of music theory and complex Digital Audio Workstations (DAWs). Today, AI tools serve as an "exoskeleton" for creativity. They handle the heavy lifting—mixing, mastering, and transcription—allowing creators to focus on the "vibe."

Some producers report a 30-40% increase in workflow speed when using AI assistants for administrative tasks such as tagging samples or identifying keys. The role of the tool is to remove friction. If you have a sample loop but don't know the notes, an AI tool can identify them in seconds, letting you layer new instruments on top without guessing.
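Key identification shows how much of this "administrative" work is classic pattern matching. A well-known approach, the Krumhansl-Schmuckler algorithm, counts how often each pitch class occurs and correlates that histogram with a major and a minor "key profile" rotated to all 12 tonics; the best correlation wins. The sketch below uses the published Krumhansl-Kessler profile values, assumes the notes are already available as MIDI numbers, and simply counts notes instead of weighting them by duration. It is not a description of how any particular product detects keys.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Krumhansl-Kessler probe-tone profiles; index 0 is the tonic.
MAJOR_PROFILE = [6.35, 2.23, 3.48, 2.33, 4.38, 4.09, 2.52, 5.19, 2.39, 3.66, 2.29, 2.88]
MINOR_PROFILE = [6.33, 2.68, 3.52, 5.38, 2.60, 3.53, 2.54, 4.75, 3.98, 2.69, 3.34, 3.17]


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if one is constant)."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def estimate_key(midi_notes):
    """Return the best-matching key name, e.g. 'C major', for a list of MIDI notes."""
    histogram = [0] * 12
    for note in midi_notes:
        histogram[note % 12] += 1  # count each pitch class (C, C#, ..., B)
    best_score, best_key = -2.0, None
    for tonic in range(12):
        for mode, profile in (("major", MAJOR_PROFILE), ("minor", MINOR_PROFILE)):
            # Rotate the profile so its tonic weight lands on pitch class `tonic`.
            rotated = [profile[(pc - tonic) % 12] for pc in range(12)]
            score = pearson(histogram, rotated)
            if score > best_score:
                best_score, best_key = score, f"{NOTE_NAMES[tonic]} {mode}"
    return best_key
```

Because the method only looks at pitch-class statistics, transposing a melody shifts the detected key by the same interval: a C major tune moved up seven semitones is reported as G major.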

One of the most powerful applications of this technology is Audio-to-MIDI conversion. MIDI (Musical Instrument Digital Interface) is the DNA of digital music—it’s not the sound itself, but the instruction (which note, how loud, how long).
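To make "the instruction, not the sound" concrete: in MIDI 1.0, a note is a pair of three-byte messages. The status byte's high nibble marks note-on (0x9n) or note-off (0x8n) and its low nibble is the channel (0-15); the next two bytes are the note number and the velocity (how loud). "How long" is not stored in either message; it is the time between a note-on and its matching note-off. The helper names and the `(time, message)` input format below are hypothetical; the byte layout follows the MIDI 1.0 specification, including the convention that a note-on with velocity 0 acts as a note-off.

```python
def decode_message(message):
    """Decode a 3-byte MIDI note message into (kind, channel, note, velocity)."""
    status, note, velocity = message[0], message[1], message[2]
    kind_nibble = status & 0xF0   # message type
    channel = status & 0x0F       # channel 0-15
    if kind_nibble == 0x90 and velocity > 0:
        kind = "note_on"
    elif kind_nibble in (0x80, 0x90):
        kind = "note_off"         # includes note-on with velocity 0
    else:
        raise ValueError(f"not a note message: status 0x{status:02X}")
    return kind, channel, note, velocity


def note_durations(timed_messages):
    """Pair note-ons with later note-offs; returns (note, velocity, start, length)."""
    open_notes = {}
    result = []
    for time, message in timed_messages:
        kind, channel, note, velocity = decode_message(message)
        key = (channel, note)
        if kind == "note_on":
            open_notes[key] = (time, velocity)
        elif key in open_notes:
            start, on_velocity = open_notes.pop(key)
            result.append((note, on_velocity, start, time - start))
    return result
```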

Transforming a raw audio file (WAV/MP3) into MIDI used to be a nightmare of manual transcription. Let's look at how MusicArt streamlines this process.

You have a recording of a piano solo or a complex guitar riff, and you want to change the instrument to a synthesizer. You can't do that with the audio file. You need the MIDI notes.

Step 1: The Upload

Navigate to the MusicArt platform. The interface is designed for speed. You simply drag and drop your audio file into the converter. Whether it’s a rough voice memo of you humming or a polished piano sample, the "Smart Data" engine accepts it.

Step 2: The Analysis

This is where the magic happens. MusicArt’s algorithms analyze the polyphony (multiple notes played at once). Unlike early 2000s software that could only detect one note at a time (monophonic), modern AI can disentangle a chord, separating the root note from the 3rd and the 7th.
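As a rough illustration of what "disentangling a chord" means, the sketch below uses classical signal processing rather than machine learning: it measures the energy at every candidate note frequency with the Goertzel algorithm and keeps the notes close to the strongest peak, then names each note relative to the lowest one. It only works on an idealized test signal made of pure sine waves; real instruments produce overtones that fool naive peak-picking, which is one reason modern tools use trained models. Nothing here describes MusicArt's actual algorithm, and the sample rate, note range, and threshold are assumptions.

```python
import math

SAMPLE_RATE = 8000  # Hz; a low rate keeps the pure-Python loops fast


def midi_to_hz(note):
    return 440.0 * 2 ** ((note - 69) / 12)


def synth_chord(notes, seconds=0.5, sample_rate=SAMPLE_RATE):
    """Test signal: equal-amplitude pure sine waves, one per MIDI note."""
    n = int(seconds * sample_rate)
    return [
        sum(math.sin(2 * math.pi * midi_to_hz(m) * i / sample_rate) for m in notes)
        for i in range(n)
    ]


def goertzel_power(samples, freq_hz, sample_rate=SAMPLE_RATE):
    """Signal power at one frequency via the Goertzel recurrence."""
    coeff = 2 * math.cos(2 * math.pi * freq_hz / sample_rate)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def detect_notes(samples, low=48, high=84, threshold=0.25):
    """Return every candidate MIDI note whose power is near the strongest one."""
    powers = {m: goertzel_power(samples, midi_to_hz(m)) for m in range(low, high + 1)}
    peak = max(powers.values())
    return [m for m, p in powers.items() if p >= threshold * peak]


INTERVAL_NAMES = {0: "root", 3: "minor 3rd", 4: "major 3rd", 7: "5th",
                  10: "minor 7th", 11: "major 7th"}


def label_intervals(notes):
    """Name each note relative to the lowest one (assumes root position)."""
    root = min(notes)
    return {m: INTERVAL_NAMES.get((m - root) % 12, "other") for m in notes}
```

On a synthetic C7 chord (`synth_chord([60, 64, 67, 70])`), `detect_notes` recovers all four notes, and `label_intervals` tags them as root, major 3rd, 5th, and minor 7th.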

Step 3: Conversion and Export

Within seconds, the audio is converted into a .mid file.

The Result: You don't get a sound file; you get the notes.

The Application: You download this MIDI file and drag it into your DAW (like Ableton, Logic, or FL Studio).
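The contents of a .mid file are well specified. The sketch below writes a minimal format-0 Standard MIDI File: a header chunk (`MThd`) followed by a single track chunk (`MTrk`) of note-on/note-off events, each preceded by a variable-length delta time in ticks. The `(pitch, velocity, start_tick, duration_ticks)` input format and the 480 ticks-per-quarter resolution are assumptions for the example. No tempo event is written, so players fall back to the format's default of 120 BPM.

```python
import struct


def encode_varlen(value):
    """Encode a non-negative int as a MIDI variable-length quantity."""
    buffer = [value & 0x7F]
    value >>= 7
    while value:
        buffer.append((value & 0x7F) | 0x80)  # continuation bit on leading bytes
        value >>= 7
    return bytes(reversed(buffer))


def write_midi(notes, ticks_per_quarter=480):
    """Build a format-0 .mid file from (pitch, velocity, start_tick, duration_ticks)."""
    events = []
    for pitch, velocity, start, duration in notes:
        events.append((start, 1, bytes([0x90, pitch, velocity])))     # note-on, ch. 0
        events.append((start + duration, 0, bytes([0x80, pitch, 0])))  # note-off, ch. 0
    # Sort by time; at equal times, note-offs (0) come before note-ons (1).
    events.sort(key=lambda e: (e[0], e[1]))
    track = bytearray()
    last_tick = 0
    for tick, _, message in events:
        track += encode_varlen(tick - last_tick) + message
        last_tick = tick
    track += b"\x00\xff\x2f\x00"  # end-of-track meta event
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_quarter)
    return header + b"MTrk" + struct.pack(">I", len(track)) + bytes(track)
```

Saving the result is a plain binary write, e.g. `open("melody.mid", "wb").write(write_midi([(60, 100, 0, 480)]))` for a single quarter-note middle C, and the file can then be dragged into a DAW.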

Step 4: The Remix

Now that you have the MIDI data, you can assign any sound to it. That melody you hummed into your phone? You can now play it back through a violin, a distorted bass, or a vintage synthesizer. You have effectively separated the composition from the performance.
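Separating composition from performance is easy to demonstrate in code: the same note list can be rendered through different "instruments". In this sketch the instruments are just two basic oscillators, a sine wave and a square wave, standing in for the violin or synth patches a DAW would provide; the `(midi_note, start_seconds, length_seconds)` note format, the sample rate, and the gain are assumptions.

```python
import math

SAMPLE_RATE = 8000  # Hz


def midi_to_hz(note):
    return 440.0 * 2 ** ((note - 69) / 12)


def sine(phase):
    """Pure tone with no overtones. `phase` is measured in cycles."""
    return math.sin(2 * math.pi * phase)


def square(phase):
    """Hollow, buzzy tone made of odd harmonics."""
    return 1.0 if phase % 1.0 < 0.5 else -1.0


def render(notes, oscillator, sample_rate=SAMPLE_RATE, gain=0.3):
    """Render (midi_note, start_s, length_s) tuples with the given oscillator."""
    spans = [(n, int(round(s * sample_rate)), int(round(d * sample_rate)))
             for n, s, d in notes]
    out = [0.0] * max(first + count for _, first, count in spans)
    for note, first, count in spans:
        freq = midi_to_hz(note)
        for i in range(count):
            out[first + i] += gain * oscillator(freq * i / sample_rate)
    return out
```

The composition (`notes`) never changes; swapping `sine` for `square` changes only the performance, which is exactly what reassigning an instrument to a MIDI track does in a DAW.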

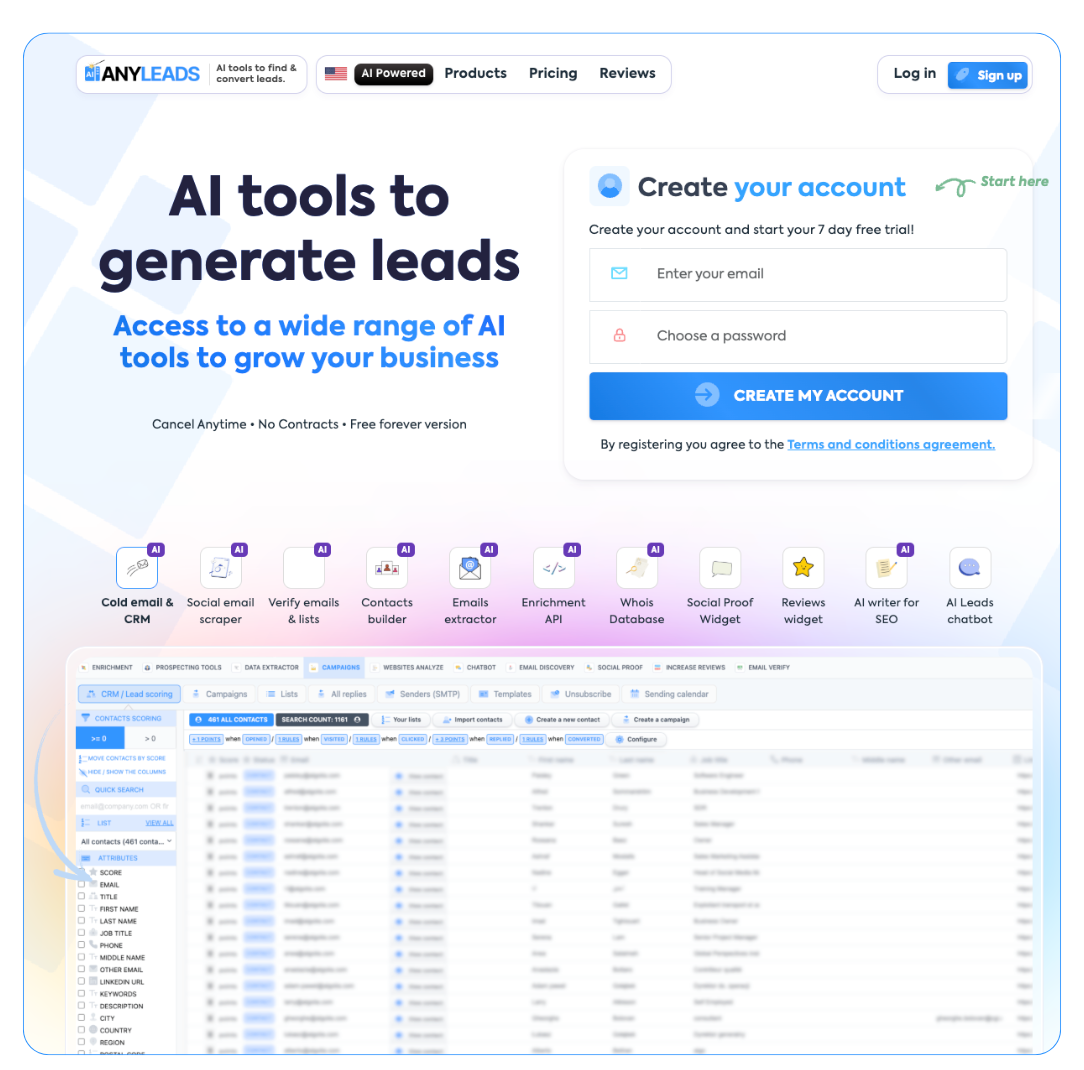

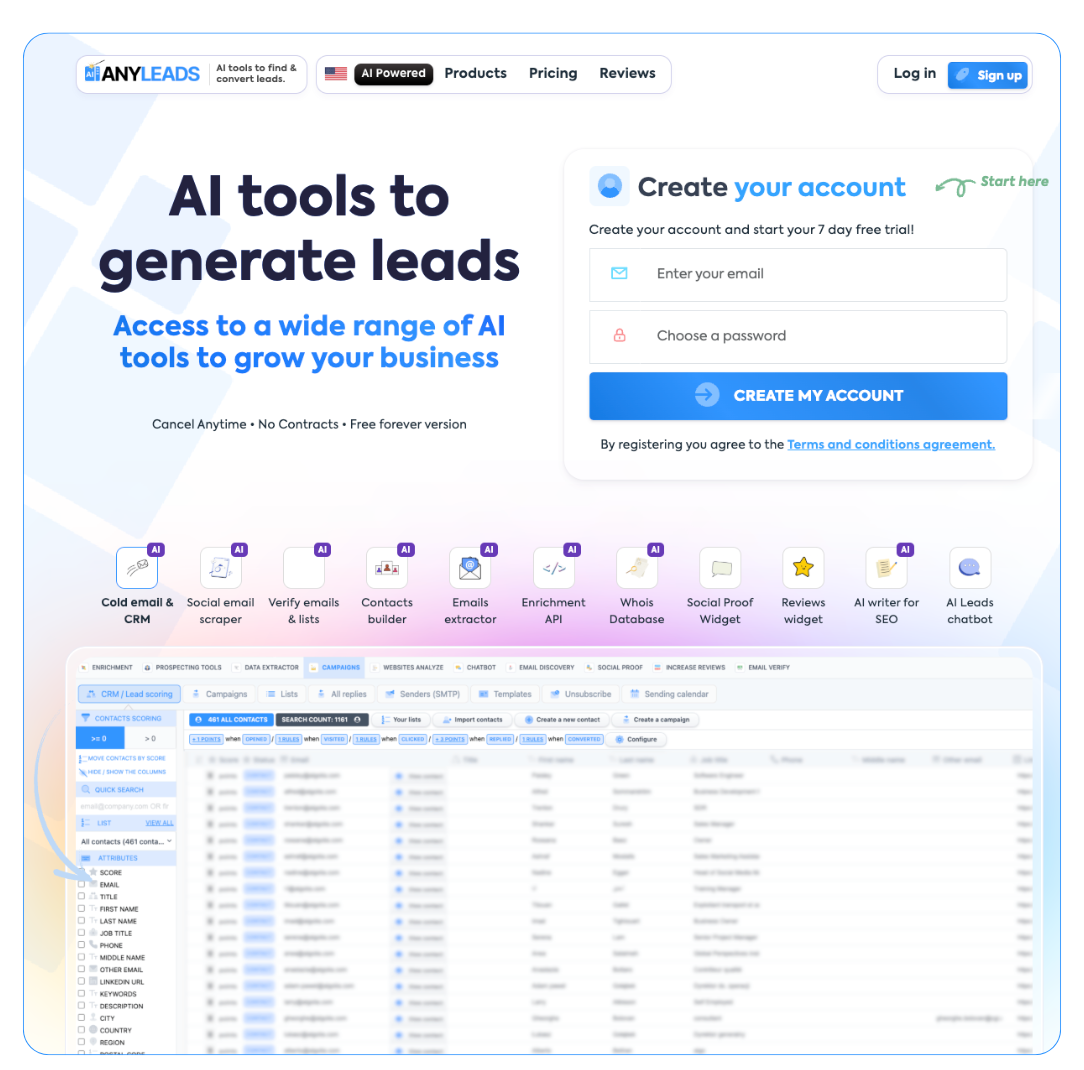

The implications of this technology extend far beyond the bedroom producer. Marketing agencies and content creators are adopting AI music tools at an unprecedented rate.

For brand managers, music licensing is a headache. Using a popular song can cost five figures; using "stock" music often sounds generic.

Case Study: A mid-sized digital agency recently reported cutting their audio licensing budget by 60% by switching to AI-assisted generation for social media background tracks.

Imagine a video campaign that needs a 15-second intro.

Traditional Method: Search stock libraries for hours to find a track that fits the timing.

AI Method: Upload a reference track (a "temp track") to a tool like MusicArt, convert its structure to MIDI, and generate a new, original piece that matches the exact tempo and mood of the reference instead of reusing the copyrighted recording.
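Matching the tempo of a reference and filling a 15-second slot is mostly arithmetic. Assuming beat onsets have already been detected in the temp track (the detection itself is not shown), this sketch estimates the tempo from the median gap between beats and computes the tempo at which a whole number of 4/4 bars fills the slot exactly: 8 bars of 4 beats in 15 seconds is 32 × 60 / 15 = 128 BPM. The function names are hypothetical; the microseconds-per-quarter-note value is the unit a MIDI Set Tempo event stores.

```python
import statistics


def estimate_bpm(onset_times):
    """Estimate tempo from beat onset times (seconds) via the median gap."""
    gaps = [b - a for a, b in zip(onset_times, onset_times[1:])]
    return 60.0 / statistics.median(gaps)


def tempo_for_bars(target_seconds, bars, beats_per_bar=4):
    """Tempo (BPM) that makes `bars` bars last exactly `target_seconds`."""
    return bars * beats_per_bar * 60.0 / target_seconds


def midi_tempo(bpm):
    """Microseconds per quarter note, as stored in a MIDI Set Tempo event."""
    return round(60_000_000 / bpm)
```

Using the median rather than the mean keeps one missed or doubled beat from skewing the estimate.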

Brands are using AI to generate endless variations of their sonic logo. With their jingle stored as MIDI data, they can quickly adapt their brand sound to fit a sad commercial, an upbeat TikTok, or a relaxing tutorial video, keeping the brand consistent while adapting to the context.
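Because a jingle stored as MIDI is just pitches and timings, the kinds of variations described above reduce to simple transformations. This sketch uses a hypothetical note format of `(midi_pitch, start_beat, length_beats)` and shows three: transposing to a new key, switching a major melody to its parallel natural minor (lowering the 3rd, 6th, and 7th scale degrees) for a sadder mood, and re-timing it at a different tempo. Real adaptation would also change instrumentation and arrangement, which are not shown.

```python
# Semitone shifts that turn major-scale degrees into natural-minor ones.
MAJOR_TO_MINOR = {4: -1, 9: -1, 11: -1}  # 3rd, 6th and 7th degrees down a semitone


def transpose(notes, semitones):
    """Move every note up (or down, if negative) by a number of semitones."""
    return [(p + semitones, s, d) for p, s, d in notes]


def to_parallel_minor(notes, tonic_pc):
    """Turn a major-key melody into natural minor on the same tonic (0 = C)."""
    return [(p + MAJOR_TO_MINOR.get((p - tonic_pc) % 12, 0), s, d)
            for p, s, d in notes]


def to_seconds(notes, bpm):
    """Convert beat positions and lengths to seconds at a given tempo."""
    seconds_per_beat = 60.0 / bpm
    return [(p, s * seconds_per_beat, d * seconds_per_beat) for p, s, d in notes]
```

For a C major jingle `[(60, 0, 1), (64, 1, 1), (67, 2, 1), (72, 3, 2)]`, `to_parallel_minor(jingle, 0)` lowers the E to E-flat while leaving C, G, and the top C untouched.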

We are currently in the "adoption phase" of AI music. What does the "maturity phase" look like?

Real-Time Adaptation: We predict the rise of adaptive audio in gaming and fitness apps, where the music changes in real-time based on the user's heart rate or game intensity, generated on the fly by AI.

Stem Separation 2.0: Audio-to-MIDI will evolve to handle full orchestral tracks, perfectly isolating every instrument into separate MIDI channels from a single mixed MP3.

Collaborative AI: Instead of just a tool, AI will act as a bandmate, improvising MIDI responses to your playing in live settings.
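The real-time adaptation idea can be sketched in a few lines. Everything here is a hypothetical design for illustration, not an existing product's behavior: a heart-rate reading is mapped linearly onto a tempo range, and the playback tempo is smoothed toward that target (exponential smoothing) so the music does not jump abruptly. The ranges (60-180 beats per minute of heart rate, 80-160 BPM of music) and the smoothing factor are arbitrary choices.

```python
def target_bpm(heart_rate, rest_hr=60, max_hr=180, min_bpm=80, max_bpm=160):
    """Linearly map heart rate onto a music tempo range, clamped at both ends."""
    t = (heart_rate - rest_hr) / (max_hr - rest_hr)
    t = min(1.0, max(0.0, t))
    return min_bpm + t * (max_bpm - min_bpm)


class TempoFollower:
    """Exponential smoothing so the music drifts toward the target tempo."""

    def __init__(self, start_bpm=100.0, alpha=0.2):
        self.bpm = start_bpm
        self.alpha = alpha  # 0 < alpha <= 1; higher reacts faster

    def update(self, heart_rate):
        self.bpm += self.alpha * (target_bpm(heart_rate) - self.bpm)
        return self.bpm
```

Each new sensor reading moves the tempo a fixed fraction of the way to its target, so a sudden sprint ramps the music up over several updates instead of in one jarring step.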

The integration of Smart Data into music production is not about robots replacing humans. It is about removing the technical shackles that keep great ideas from becoming great tracks.

Tools like MusicArt are pioneering this shift by making complex processes—like Audio-to-MIDI conversion—accessible to everyone. Whether you are a musician trying to decipher a jazz chord progression or a marketer looking for the perfect, royalty-free beat, the barrier to entry has never been lower.

The future of music isn't just about listening; it's about interacting with the data behind the sound. The question is no longer "Can I make music?" but rather, "What do I want to create today?"